How Azure Machine Learning and Web Apps help Nexon to improve its game AI

Nexon delivers online game entertainment and is a market leader in providing fun and deeply immersive content to a worldwide audience. In a hackfest with Microsoft, Nexon worked on ways to improve artificial intelligence to make the game experience more captivating for players.

The hack team included the following:

- Kitae Noh – G5, Nexon Korea

- Jaesuck Kim – E1, Nexon Korea

- Junghoon Cho – Lead Programmer, Nexon Korea

- Jubok Kim – Technical Director, Nexon Korea

- Yeram Sim – Associate Programmer, Nexon Korea

- Cheonseob Hwang – Associate Programmer, Nexon Korea

- Sunghee Lee – Managing Director, DS-eTrade

- Hwanduck Kim – Director, DS-eTrade

- Daeseong Myung – Deputy General Manager, DS-eTrade

- Goen Park – Assistant Manager, DS-eTrade

- Ye Jin Koo – Audience Evangelism Manager, Microsoft

- Dae Woo Kim – Senior Technical Evangelist, Microsoft

- Hyewon Ryu – Audience Evangelism Manager, Microsoft

- Eunji Kim – Technical Evangelist, Microsoft

Customer profile

Nexon Korea Corporation is the No. 1 game company in Korea in terms of revenue. Established in 1994, Nexon is a pioneer and global leader in the MMORPG (massively multiplayer online role-playing game) industry. It has grown into an international company with several branches: Nexon Japan, Nexon America, and Nexon Europe. It is aggressively establishing a presence in the global market with high-quality games and services, serving 1.4 billion users in more than 110 countries.

Nexon has developed and published more than 50 world-class PC online games and 30 mobile games in a series and is currently developing 20+ mobile projects with heavy financial investment.

Pain point or problem area to be addressed

Following the success of the Mabinogi MMORPG, Nexon published Mabinogi Duel, a trading card game (TCG) based on the Mabinogi intellectual property. It is available from Google Play and the App Store.

For a TCG, artificial intelligence (AI) is the most important part of player versus environment (PvE) play. Most of the game’s players leave the play queue because the AI is not fun to play against and its predictable play pattern makes the game boring. Nexon needed solutions to improve the game AI, such as a scalable real-time web service and batch analytics, in order to reduce the customer churn rate and increase profit.

Solution, steps, and delivery

Nexon needed to improve the game AI and to build two services: a scalable real-time web service for tasks such as game card deck suggestions, and batch tasks to predict the customer churn rate.

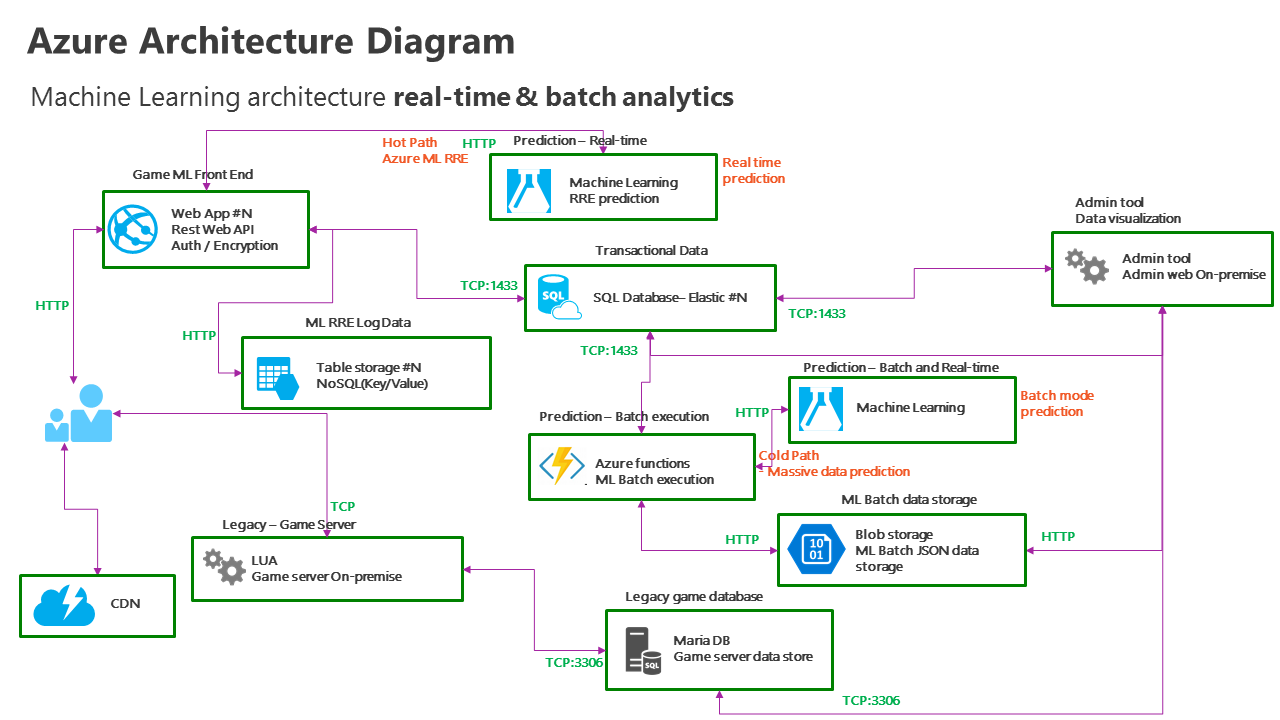

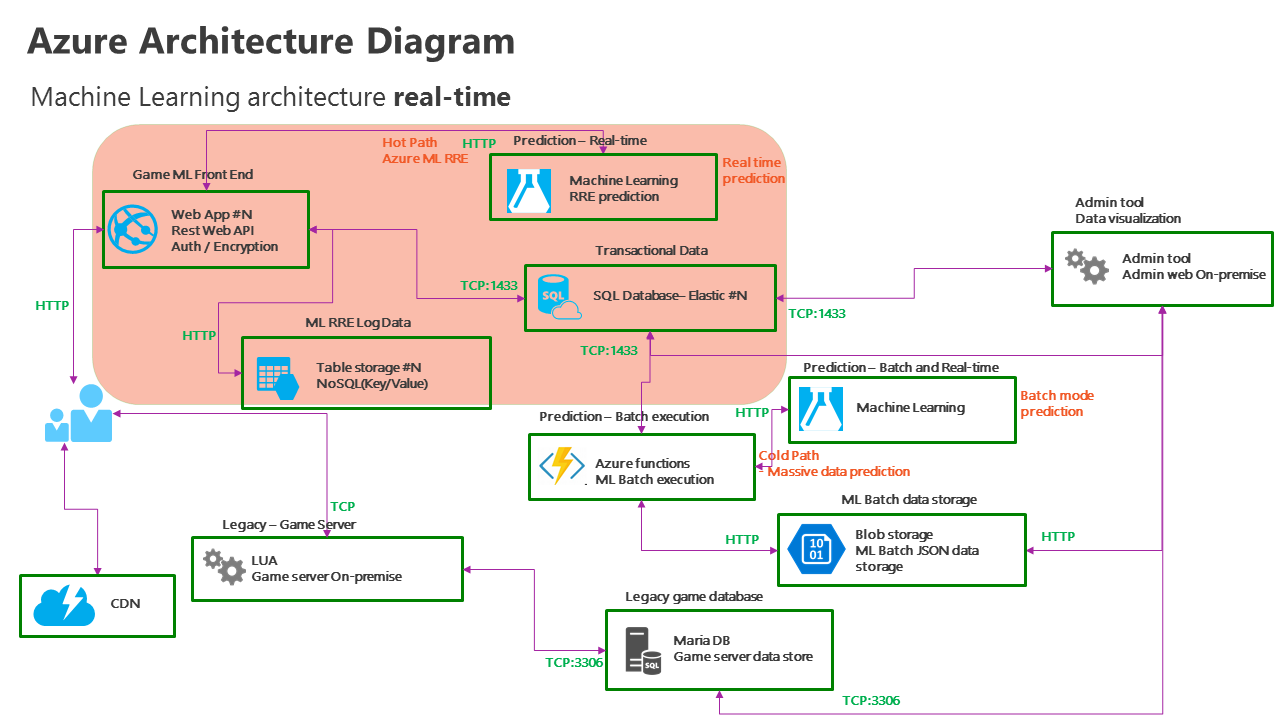

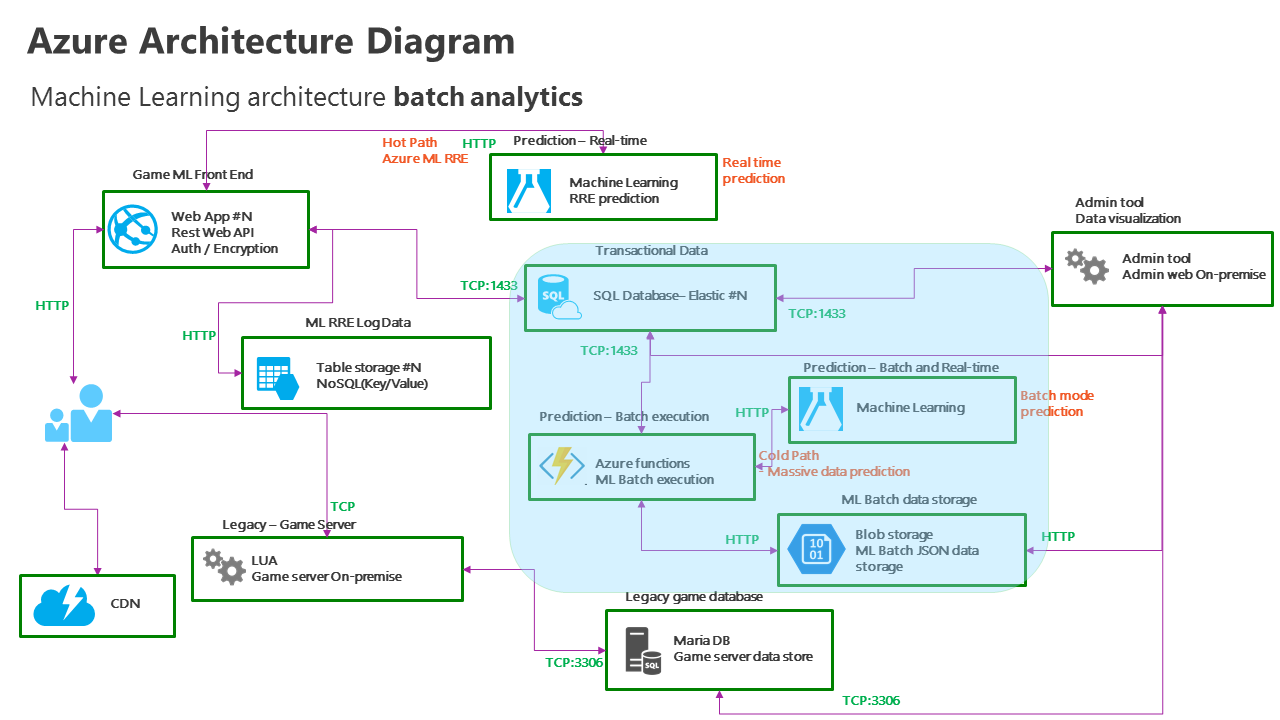

We therefore proposed Azure Machine Learning for analytics and the Web Apps feature of Azure App Service as a RESTful API front end for complex gameplay. The front end handles authentication and a large volume of Azure Machine Learning API calls as a scalable service. We also proposed Azure Functions as the scheduler and processor for batch analytics tasks.

The Nexon Mabinogi Duel team and Microsoft created an Azure-based solution architecture. By using Web Apps and Azure Functions, we were able to deliver real-time analytics and scheduled batch analytics results.

Real-time predicted analytics with Web Apps

To implement real-time analytics, the hackfest team adopted flexible Web Apps for the front end because the Nexon mobile dev team was using C#, Node.js, and other languages and frameworks. Furthermore, since auto-scale and easy deployment (continuous deployment) are the most necessary features for the Nexon mobile dev team, Web Apps seemed to be the best choice.

GitHub repository: https://github.com/m-duel-project/frontend-realtime

The front-end web app is separate from the on-premises Lua game server. Its basic role is to receive real-time analytics requests and forward them to Azure Machine Learning for predictive analytics.

This service controls access: it checks basic authentication and processes AES-encrypted JSON data as a RESTful API service. It was implemented with ASP.NET Web API during the hackfest. Web API performs the stages listed next.

Role of front-end web app

- Request/response to Azure Machine Learning predictive analytics

- Store predicted data on NoSQL – Azure Table storage

- Save predicted data on RDBMS – Azure SQL Database
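Before these stages run, the service has to decrypt the AES-encrypted JSON body mentioned earlier. The article does not describe the cipher mode, key size, or key exchange, so the following is only a minimal sketch under assumptions: it uses the .NET defaults (CBC mode, PKCS7 padding), a 256-bit key, and hypothetical helper names `EncryptJson` and `DecryptJson`. It is written as C# top-level statements.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Encrypts a JSON string with AES using the .NET defaults (CBC mode, PKCS7 padding).
// Hypothetical helper: the hackfest code's actual cipher settings are not documented.
static byte[] EncryptJson(string json, byte[] key, byte[] iv)
{
    using (Aes aes = Aes.Create())
    using (ICryptoTransform encryptor = aes.CreateEncryptor(key, iv))
    {
        byte[] plain = Encoding.UTF8.GetBytes(json);
        return encryptor.TransformFinalBlock(plain, 0, plain.Length);
    }
}

// Decrypts the bytes produced by EncryptJson back into the JSON string.
static string DecryptJson(byte[] cipher, byte[] key, byte[] iv)
{
    using (Aes aes = Aes.Create())
    using (ICryptoTransform decryptor = aes.CreateDecryptor(key, iv))
    {
        byte[] plain = decryptor.TransformFinalBlock(cipher, 0, cipher.Length);
        return Encoding.UTF8.GetString(plain);
    }
}

// Demo key and IV generated on the spot (assumption); a real service would
// load a shared key from protected configuration.
byte[] key = new byte[32];
byte[] iv = new byte[16];
using (var rng = RandomNumberGenerator.Create())
{
    rng.GetBytes(key);
    rng.GetBytes(iv);
}

string original = "{\"userId\":\"user-1001\"}"; // made-up payload
string restored = DecryptJson(EncryptJson(original, key, iv), key, iv);
Console.WriteLine(restored == original); // True
```

Encoding the plaintext as UTF-8 before encryption keeps non-ASCII text, such as Korean player data, intact after decryption.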

Azure Machine Learning model repository: https://github.com/m-duel-project/azure-hands-on/blob/master/docs/azure_ml_tutorial.md

We designed the service to receive real-time prediction requests from the player's device, send them to Azure Machine Learning, store the predicted results in Azure Table storage for logging, and finally save the predicted data in a relational database management system (RDBMS), Azure SQL Database.

Azure Table storage is key/value-based NoSQL storage that can be used not only from C# but also from Node.js and other languages through simple client APIs. It was the best option for log analytics and log data storage because it is persistent and stable.

Additionally, to save the state data for devices, the hackfest team used Azure SQL Database. Its advantage is that developers can handle data with familiar SQL statements and stored procedures on a PaaS-based database as a service.

We went through the following procedures by using these development/testing tools:

- Postman, simulating a client device, sent JSON data in a RESTful POST request to the ASP.NET Web API running on Web Apps. The public test code for this API is built with a GET method.

- Web Apps receives the data and relays it to Azure Machine Learning to receive a real-time prediction response.

- The web app code stores the received response in Azure Table storage as log data by executing the InsertEntity method.

- When the web app receives data, it sets the Table storage PartitionKey and RowKey to the user ID and a GUID. The most important predicted data from Azure Machine Learning is stored as-is in a Table storage property.

- For Azure Table storage development and testing, use the Azure Storage Explorer tool.

- Save predicted results from Azure Machine Learning on Azure SQL Database.
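The key scheme in the steps above (user ID as the partition key, a GUID as the row key) can be sketched without the storage SDK. This is an illustration only: `MakeLogKeys` and the sample user ID are hypothetical names, not part of the hackfest code, and the block is written as C# top-level statements.

```csharp
using System;

// Builds the Table storage keys described above: the user ID becomes the
// partition key and a freshly generated GUID becomes the row key.
// Hypothetical helper, not taken from the hackfest repository.
static (string PartitionKey, string RowKey) MakeLogKeys(string userId)
{
    if (string.IsNullOrWhiteSpace(userId))
        throw new ArgumentException("A user ID is required.", nameof(userId));
    return (userId, Guid.NewGuid().ToString());
}

var first = MakeLogKeys("user-1001");  // "user-1001" is a made-up ID
var second = MakeLogKeys("user-1001");

Console.WriteLine(first.PartitionKey == second.PartitionKey); // True: same partition
Console.WriteLine(first.RowKey == second.RowKey);             // False: one row per request
```

With this scheme, all log rows for one player share a partition, so they can be read together, while the GUID keeps every prediction request as a separate row.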

Code repository link: https://github.com/m-duel-project/frontend-realtime

The following is a Web API code snippet.

// GET api/values

public IEnumerable<string> Get()

{

// azure ml rre start

// InvokeRequestResponseService().Wait();

string result = HttpPostRequestResponseService();

// azure table storage start

// InsertEntity();

// sql database insert

// SqlWrite();

return new string[] { result };

}

Code - calling Azure Machine Learning.

public static string HttpPostRequestResponseService()

{

var request = (HttpWebRequest)WebRequest.Create(

//"http://requestb.in/z2ewgtz2"

"https://asiasoutheast.services.azureml.net/<API_ADDRESS>"

);

var postData = "{\"Inputs\":{\"input1\":{\"ColumnNames\":[\"idx\",\"Age\",\"NumPromotion\",\"idx\",\"AvgPlayMinDay\",\"90DaysItemPurchaseCount\",\"GameLevelRange\",\"Crystals\",\"PromotionPath\",\"Race\",\"Gender\",\"RegCode\",\"PurchaseNum\",\"LogonCountPerWeek\",\"Country\",\"ChurnYN\"],\"Values\":[[\"0\",\"0\",\"0\",\"0\",\"0\",\"0\",\"0\",\"0\",\"value\",\"value\",\"value\",\"0\",\"0\",\"0\",\"value\",\"value\"],[\"0\",\"0\",\"0\",\"0\",\"0\",\"0\",\"0\",\"0\",\"value\",\"value\",\"value\",\"0\",\"0\",\"0\",\"value\",\"value\"]]}},\"GlobalParameters\":{}}";

var data = Encoding.UTF8.GetBytes(postData);

request.Method = "POST";

request.Headers[HttpRequestHeader.Authorization] =

"<Bearer_API_KEY>";

request.ContentType = "application/json; charset=utf-8";

request.ContentLength = data.Length;

using (var stream = request.GetRequestStream())

{

stream.Write(data, 0, data.Length);

}

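try

{

// Dispose the response and the reader once the body has been read.

using (var response = (HttpWebResponse)request.GetResponse())

using (var reader = new StreamReader(response.GetResponseStream(), Encoding.UTF8))

{

return reader.ReadToEnd();

}

}

catch (Exception e)

{

// Return the error text so that failures such as HTTP 400 can be inspected by the caller.

return e.ToString();

}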

}
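The hand-escaped JSON string in the function above is easy to get wrong when columns change. As a hedged alternative, the same request shape (`Inputs` / `input1` / `ColumnNames` / `Values` / `GlobalParameters`) could be built from objects and serialized. This sketch assumes `System.Text.Json` is available (.NET Core 3.0 or later), which the hackfest code does not use; `BuildScoringRequest` and the sample columns are hypothetical. It is written as C# top-level statements.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Builds a request body with the same shape as the hand-written postData string.
// Hypothetical helper; the serializer takes care of quoting and escaping.
static string BuildScoringRequest(string[] columnNames, string[][] rows)
{
    var payload = new
    {
        Inputs = new
        {
            input1 = new { ColumnNames = columnNames, Values = rows }
        },
        GlobalParameters = new Dictionary<string, string>()
    };
    return JsonSerializer.Serialize(payload);
}

// Two made-up columns and one row, only to show the call.
string json = BuildScoringRequest(
    new[] { "Age", "Country" },
    new[] { new[] { "25", "KR" } });

Console.WriteLine(json);
```

The resulting string can be passed to `Encoding.UTF8.GetBytes` in the same way as `postData`.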

Code - store predicted data from Azure Machine Learning to Azure Table storage.

public static void InsertEntity()

{

CloudStorageAccount storageAccount = CloudStorageAccount.Parse(

CloudConfigurationManager.GetSetting("StorageConnectionString"));

CloudTableClient tableClient = storageAccount.CreateCloudTableClient();

CloudTable table = tableClient.GetTableReference("GameLog");

CustomerEntity customer1 = new CustomerEntity("test", "data");

// add more code data here

TableOperation insertOperation = TableOperation.Insert(customer1);

// execute entity insert operation

table.Execute(insertOperation);

}

While implementing real-time prediction requests, the hackfest team encountered the following error and fixed it accordingly.

Azure Machine Learning real-time request fail and debugging

The asynchronous code that called Azure Machine Learning from ASP.NET Web API failed with an HTTP 400 error. The hackfest team turned on Azure Machine Learning logging but had difficulty finding the root cause. The team tried the HttpWebRequest object instead of HttpClient but received the same HTTP 400 error.

To debug this error, the team used an HTTP inspection tool to compare successful requests with the requests that returned HTTP 400.

Through the inspection, the team found that the Korean text in the data columns was corrupted. The team changed the POST body to be encoded explicitly as UTF-8 rather than ASCII, which resolved the problem.
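The effect of the two encodings on Korean text can be reproduced in a few lines. The sketch below is not the team's debugging code: `RoundTrip` and the sample JSON fragment are made up for illustration, and the block is written as C# top-level statements.

```csharp
using System;
using System.Text;

// Round-trips text through an encoding, as happens when a request body is
// turned into bytes by the client and decoded again by the service.
static string RoundTrip(string text, Encoding encoding)
{
    byte[] bytes = encoding.GetBytes(text);
    return encoding.GetString(bytes);
}

// Hypothetical request fragment that contains Korean text.
string body = "{\"Race\":\"인간\"}";

string viaAscii = RoundTrip(body, Encoding.ASCII);
string viaUtf8 = RoundTrip(body, Encoding.UTF8);

Console.WriteLine(viaAscii == body); // False: each Korean character became '?'
Console.WriteLine(viaUtf8 == body);  // True: UTF-8 preserves the Korean text
```

This matches the request code shown earlier, which calls `Encoding.UTF8.GetBytes` and declares `charset=utf-8` in the content type.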

Azure Functions app – Machine Learning batch analytics

There are many ways to implement a scheduled task on Microsoft Azure: the Azure Functions app, Azure WebJobs, Azure Scheduler, and the Quartz framework on a virtual machine.

During the hackfest, the team reviewed these scheduler services and chose the Azure Functions app. Its serverless model and its blob, timer, and webhook triggers for batch analytics let the team focus on the code itself.

We went through the following procedures:

- We assume that data to be analyzed by batch prediction is generated automatically or manually from the game admin website.

- We assume that when the game admin executes batch analytics, a JSON format file is uploaded to an Azure Blob storage “input” container, either on demand or periodically.

- The Azure Functions app, the back-end processor, reads the file from Azure Blob storage on an upload trigger. It can also be executed by a webhook, if necessary.

- The Azure Functions app submits the file in Blob storage to Azure Machine Learning as a batch job.

- The results received from Azure Machine Learning are saved in the Blob storage “output” container.

- Triggered by the Blob storage “output” container, the Azure Functions app saves the batch results to Azure SQL Database.

Azure Functions app code implementation

The code consists of two Azure Functions apps, each executed by a BlobTrigger.

- FirstTrigger: monitors the input path with a BlobTrigger → builds a batch job and starts it by job ID

#r "Microsoft.WindowsAzure.Storage"

using System;

using System.Threading.Tasks;

using System.Net.Http;

using System.Net.Http.Headers;

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Auth;

using Microsoft.WindowsAzure.Storage.Blob;

public class AzureBlobDataReference

{

// Storage connection string used for regular blobs. It has the following format:

// DefaultEndpointsProtocol=https;AccountName=ACCOUNT_NAME;AccountKey=ACCOUNT_KEY

// It's not used for shared access signature blobs.

public string ConnectionString { get; set; }

// Relative uri for the blob, used for regular blobs as well as shared access

// signature blobs.

public string RelativeLocation { get; set; }

// Base url, only used for shared access signature blobs.

public string BaseLocation { get; set; }

// Shared access signature, only used for shared access signature blobs.

public string SasBlobToken { get; set; }

}

public enum BatchScoreStatusCode

{

NotStarted,

Running,

Failed,

Cancelled,

Finished

}

public class BatchScoreStatus

{

// Status code for the batch scoring job

public BatchScoreStatusCode StatusCode { get; set; }

// Locations for the potential multiple batch scoring outputs

public IDictionary<string, AzureBlobDataReference> Results { get; set; }

// Error details, if any

public string Details { get; set; }

}

public class BatchExecutionRequest

{

public IDictionary<string, AzureBlobDataReference> Inputs { get; set; }

public IDictionary<string, string> GlobalParameters { get; set; }

// Locations for the potential multiple batch scoring outputs

public IDictionary<string, AzureBlobDataReference> Outputs { get; set; }

}

static async Task WriteFailedResponse(HttpResponseMessage response, TraceWriter log)

{

log.Info(string.Format("The request failed with status code: {0}", response.StatusCode));

// Print the headers - they include the request ID and the timestamp, which are useful for debugging the failure

log.Info(response.Headers.ToString());

string responseContent = await response.Content.ReadAsStringAsync();

log.Info(responseContent);

}

static async Task RunBatch(TraceWriter log)

{

string storageAccountName = "<ACCOUNT_NAME>";

string storageAccountKey = "<ACCOUNT_KEY>";

string storageConnectionString = string.Format("DefaultEndpointsProtocol=https;AccountName={0};AccountKey={1}", storageAccountName, storageAccountKey);

string storageContainerName = "input";

string storageOutputContainerName = "output";

using (HttpClient client = new HttpClient())

{

var request = new BatchExecutionRequest()

{

Inputs = new Dictionary<string, AzureBlobDataReference>()

{

{

"input1",

new AzureBlobDataReference()

{

ConnectionString = storageConnectionString,

RelativeLocation = string.Format("{0}/game_data_utf_8.csv", storageContainerName)

}

},

},

Outputs = new Dictionary<string, AzureBlobDataReference>()

{

{

"output1",

new AzureBlobDataReference()

{

ConnectionString = storageConnectionString,

RelativeLocation = string.Format("/{0}/output1results.csv", storageOutputContainerName)

}

},

},

GlobalParameters = new Dictionary<string, string>() { }

};

string apiKey = "<API_KEY>";

client.DefaultRequestHeaders.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);

// WARNING: The 'await' statement below can result in a deadlock if you are calling this code from the UI thread of an ASP.Net application.

// One way to address this would be to call ConfigureAwait(false) so that the execution does not attempt to resume on the original context.

// For instance, replace code such as:

// result = await DoSomeTask()

// with the following:

// result = await DoSomeTask().ConfigureAwait(false)

log.Info("Submitting the job...");

// submit the job

string BaseUrl = "https://ussouthcentral.services.azureml.net/<BATCH_URL>/jobs";

var response = await client.PostAsJsonAsync(BaseUrl + "?api-version=2.0", request);

if (!response.IsSuccessStatusCode)

{

await WriteFailedResponse(response, log);

return;

}

string jobId = await response.Content.ReadAsAsync<string>();

log.Info(string.Format("Job ID: {0}", jobId));

// start the job

log.Info("Starting the job...");

response = await client.PostAsync(BaseUrl + "/" + jobId + "/start?api-version=2.0", null);

if (!response.IsSuccessStatusCode)

{

await WriteFailedResponse(response, log);

return;

}

}

}

public static async Task Run(string myBlob, TraceWriter log)

{

//log.Info($"C# Blob trigger function processed: {myBlob}");

// Await the batch submission instead of blocking the thread with Wait().

await RunBatch(log);

}

- SecondTrigger: monitors the output path with a BlobTrigger → inserts the output blob into an Azure SQL Database table

#r "System.Data"

using System;

using System.Data.SqlClient;

public static void Run(string myBlob, TraceWriter log)

{

log.Info("C# Blob trigger function processed");

using (var connection = new SqlConnection(

"Server=tcp:<SQL_SERVER>.database.windows.net,1433;Initial Catalog=<DBNAME>;Persist Security Info=False;User ID=<LOGON_NAME>;Password=<LOGON_PWD>;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;"

))

{

connection.Open();

// The outer using block already disposes the connection, so only the command needs its own using.

using (var cmd = new SqlCommand("INSERT INTO batch(blob) VALUES(@blob)", connection))

{

// insert the blob content into the Azure SQL Database table

cmd.Parameters.AddWithValue("@blob", myBlob);

cmd.ExecuteNonQuery();

}

log.Info("Batch result saved to Azure SQL Database.");

}

}

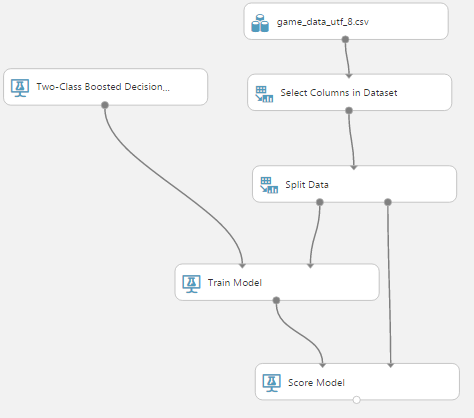

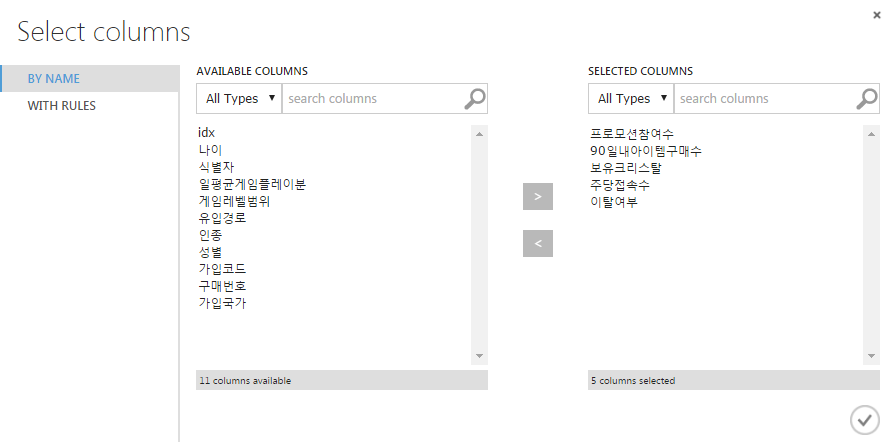

Implement in-game Machine Learning

During the hackfest, our team implemented Game User Churn Prediction and Game User Item Suggestion with Azure Machine Learning. In addition, we prototyped predictive analytics inside the in-game client to perform TCG card deck suggestion and improve the game AI pattern.

The hackfest team studied unsupervised learning and tried the most usable R algorithms. The objective was to call R functions from C# and to test various R algorithms. To test this, the team built a model on the Iris data set and wrote in-game prediction code against it.

Iris data link repository: https://github.com/m-duel-project/ml-data

Code link repository: https://github.com/m-duel-project/in-game-rl

###################################

##### Logistic Regression Analytics #####

###################################

#install.packages("glm2")

library(glm2)

# select 2 target category variables

adj_iris <- iris

adj_iris$Species <- as.character(adj_iris$Species)

adj_iris <- subset(adj_iris, Species %in% c('versicolor', 'virginica'))

adj_iris$Species <- as.factor(adj_iris$Species)

set.seed(10)

# train / test split

ind <- sample(2, nrow(adj_iris), replace=TRUE, prob=c(0.7,0.3))

trainData <- adj_iris[ind==1, ]

testData <- adj_iris[ind==2, ]

# modeling

formula <- Species ~ Sepal.Length + Sepal.Width + Petal.Length + Petal.Width

iris_logit <- glm(formula, data=trainData, family=binomial())

# prediction function; it is saved together with the model as .RData below

flower_func_logit =

function(sl, sw, pl, pw){

testData <- data.frame(Sepal.Length=sl, Sepal.Width=sw,

Petal.Length=pl, Petal.Width=pw)

logitpred_a <- predict(iris_logit, newdata=testData, type="response")

logitpred <- ifelse(logitpred_a>=0.5, "virginica", "versicolor")

logitpred

}

save(iris_logit, flower_func_logit, file = "c:/Users/hcs64648/Desktop/iris_logit.RData")

The team built a logistic regression model, saved it with its prediction function in an RData file, and tried calling the function from C# and various other languages.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using RDotNet;

namespace ConsoleApplication1

{

class Program

{

static void Main(string[] args)

{

REngine.SetEnvironmentVariables();

REngine engine = REngine.GetInstance();

engine.Initialize();

engine.Evaluate("load('c:/Users/hcs64648/Desktop/iris_logit.RData')");

//var iris_logit = engine.GetSymbol("iris_logit");

//var flower_func_logit = engine.GetSymbol("flower_func_logit");

var rv = engine.Evaluate("flower_func_logit(1,1,1,1)"); // prediction here

Console.WriteLine(rv.ToString());

engine.Dispose();

}

}

}

The team was able to execute R code and load the function from the RData file by using the R.NET (RDotNet) project.

R.NET project: https://www.nuget.org/packages/R.NET.Community/1.6.5

R code can be called through interop from C#, C++, or Lua. In this way, the Nexon Mabinogi team is building extensive knowledge about Machine Learning-related technologies and implementing advanced analytics code in the game.

Nexon’s issue is a typical scenario that most mobile game companies encounter: gaining more revenue from their service through scalable, data science-based methods.

Conclusion

During the hackfest, Nexon developed real-time predictive analytics and batch predictive analytics according to the solution architecture diagram.

Nexon originally planned to build an AWS IaaS front end with AWS Machine Learning as a back end, or AWS IaaS with TensorFlow, the Python pandas library, and the scikit-learn machine learning toolkit.

However, through the hackfest, Nexon successfully built a RESTful API front end on Web Apps, gaining scalability and fewer management points, which keeps the team focused on the code itself.

Also, simple serverless code in C# script and Node.js was a “wow” factor for the Nexon hackfest developers: they could set up an event trigger without writing a single line of code and only had to write the back-end batch analytics logic on the Azure Functions app.

“Machine Learning is a hot issue in the mobile and online gaming industry and beyond. So Nexon DevCat HQ was prepared in various ways to adopt it. Through the Microsoft three-day hackfest, with a full utilization of Web Apps and Azure Machine Learning for Azure PaaS, we could develop a perfect prototype by concentrating on an immediate predictive analytics service.

“The hackfest has been a great help for us to be able to build fundamental technologies for AI development, which makes smarter and more actions available. In the process, Machine Learning predictive analytics technologies were applied with counsel from a Microsoft partner, DS-eTrade. The Nexon, DS-eTrade, and Microsoft developers’ impassioned participation in the hackfest motivated everyone in the room. Since we believe a hackfest is the best way to collaborate and review new technologies, we plan to actively adopt it.”

– Dong-Geon Kim, Director of Nexon DevCat HQ

General lessons

With the Web Apps feature of Azure App Service, Nexon quickly deployed a large-scale RESTful API front end on a platform that hosts various OSS languages and frameworks. The Nexon developers were satisfied with the fast development, which let them focus only on the code itself. During the hackfest, they could also build infrastructure not only on IaaS virtual machines, as before, but also on PaaS (App Service), with continuous deployment and staging environments.

Additionally, to run massive batch analytics based on Azure Machine Learning, the developers needed a batch trigger that could check processing and update status on the legacy DBMS. For that task, Nexon previously had to build its own scheduler daemon process that used polling. The Azure Functions app changed this by providing triggers for timers, blob containers, and various other sources.

Because the Azure Functions app can be triggered by a blob container, Nexon simply uploaded the massive predictive analytics data to the blob container from its legacy admin tool. When the batch task ends, Azure Functions can trigger events to update status and notify legacy monitoring tools. Without building any infrastructure, polling process, or trigger daemon, the developers only need to write code in their preferred language.

Predictive analytics is expected to become established in various industries in the near future. Building a real-time prediction service with Azure App Service and a batch predictive analytics scheduler with the Azure Functions app can serve as a comprehensive solution and best practice for predictive analytics scenarios.